“i” stands for “interlaced” and “p” for “progressive.”

There are technical as well as aesthetic differences between the two. Progressive is easier to grasp.

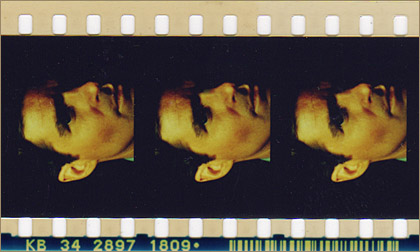

In a progressive format each frame is intact and discrete, and represents one sample in time. When you freeze a progressive frame it looks clean, much the same way a frame of motion picture film would. In fact, the analogy with film goes a step further. Some progressive formats are shot at roughly 24 frames per second (fps), making a migration from tape to film relatively straightforward as far as temporal issues are concerned.

Interlaced formats mimic SD video. Each frame displays two fields when frozen. The fields represent two discrete samples in time, so any fast motion in the frame will render the two fields clearly distinct. The fields are interlaced together like the crossed fingers of two hands, but not until they reach the display, and sometimes not until they reach our brains. Interlaced video at 29.97fps in North America (sometimes erroneously labeled NTSC) displays 59.94 interlaced fields per second.
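To make the crossed-fingers picture concrete, here is a minimal sketch of how two fields combine into one frozen frame. It is a toy model, not how any particular camera or deck works: the frame is a short list of text rows, the helper names (`make_field`, `weave`, `split_fields`) are hypothetical, and it assumes the top field is sampled first, which varies between formats.

```python
def make_field(width, lines, object_col):
    """Build one field: `lines` rows with a one-pixel object ('#') at object_col."""
    row = "".join("#" if x == object_col else "." for x in range(width))
    return [row for _ in range(lines)]


def weave(top_field, bottom_field):
    """Interleave two fields line by line into a single frame."""
    frame = []
    for top_line, bottom_line in zip(top_field, bottom_field):
        frame.append(top_line)     # even-numbered frame lines come from the top field
        frame.append(bottom_line)  # odd-numbered frame lines come from the bottom field
    return frame


def split_fields(frame):
    """Separate a frame back into its two fields."""
    return frame[0::2], frame[1::2]


# The object moves from column 2 to column 5 between the two field samples.
top = make_field(8, 2, object_col=2)      # first sample in time (assumed top field)
bottom = make_field(8, 2, object_col=5)   # second sample, one field period later
frozen_frame = weave(top, bottom)
print("\n".join(frozen_frame))
```

The printed frame alternates between the two object positions on successive lines, which is the comb-like look of fast motion in a frozen interlaced frame. With no motion between the two samples, both fields would agree and the frame would look as clean as a progressive one.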

The difference between the two is obvious to a casual viewer, although he or she may not be able to describe it well. Interlaced video in North America samples motion roughly 2.5 times as often as progressive video shot at 23.98fps (59.94 fields versus 23.98 frames per second), which gives it a finer rendition of motion. Ironically, this advantage is what most people dismiss as the “non-cinematic” look of video.
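The 2.5 figure can be checked with a few lines of arithmetic. This sketch assumes the nominal 29.97 and 23.98 rates are the fractions 30000/1001 and 24000/1001, and it treats every field or progressive frame as one motion sample; the variable names are only illustrative.

```python
from fractions import Fraction

# Assumption: the nominal "29.97" and "23.98" rates are the fractions
# 30000/1001 and 24000/1001.
INTERLACED_FRAME_RATE = Fraction(30000, 1001)
PROGRESSIVE_FRAME_RATE = Fraction(24000, 1001)
FIELDS_PER_FRAME = 2

# Each interlaced field is its own sample in time, so the field rate,
# not the frame rate, sets how often motion is sampled.
field_rate = INTERLACED_FRAME_RATE * FIELDS_PER_FRAME
motion_ratio = field_rate / PROGRESSIVE_FRAME_RATE

print(f"interlaced:  {float(field_rate):.2f} motion samples per second")
print(f"progressive: {float(PROGRESSIVE_FRAME_RATE):.2f} motion samples per second")
print(f"ratio:       {float(motion_ratio)}")
```

Under those assumptions the ratio comes out to exactly 5/2, because the 1001 divisor cancels.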

We are culturally conditioned to accept the look of 23.98fps as the look of dramatic narrative entertainment. The interlaced look is the one we often associate with the immediacy of TV news or sports telecasts.

Your network will have the final say on “i” or “p.” It is very important to determine the HD delivery standard before choosing cameras. While it is relatively easy to convert progressive material to interlaced, conversions from interlaced to progressive generally lose quality and may not be acceptable to your network.